(Header image under CC-BY by Gregory Varnum)

Hardware-backed voice assistants like Amazon Alexa and Google Assistant have received some criticism for their handling of voice data behind the scenes. The companies had outsourced quality control and machine-learning feedback to external contractors, who received voice recordings of user commands and were tasked with improving the assistants’ recognition of voice and intent. This came as a surprise to many users, who had expected their voice commands to be processed only by automated systems and not listened to by actual humans.

It is worth noting that the user intent for their voice to be recorded and sent to the cloud was generally not called into question: While the devices listen all the time, they only start recording and sending after they detect a so-called wake word: “Alexa” or “Hey Google”. There are scattered reports of accidental activation with similar sounds (“Alec, say, what’s up?”), but on balance this part of the system appears to be reasonably robust. Accidental activation is always mitigated by the fact that the devices clearly indicate their current mode: Recognition of the wake word triggers a confirmation sound and lights up the LEDs.

New reporting shows how a malicious actor can get around this user intent in a limited fashion: Several sets of bugs in the system design allowed the assistant to stay awake and send recordings to the attacker even when a user might reasonably expect it not to. It is important to note that these bugs are not remote-access vulnerabilities! User intent is still necessary to start the interaction; it’s just that the interaction lasts longer than the user expects. Also, none of the local safeguards against undetected listening are impacted: The LEDs still light up and indicate an attentive assistant.

It is in the companies’ best interest to not be found spying on their users, and the easiest way to achieve that is by not doing it. Amazon, specifically, tries very hard to be seen as privacy-preserving because that enables additional services for them. Their in-home delivery service is absolutely dependent on consumers trusting them to open their doors for the delivery driver (who in turn is instructed not to actually enter the home, but just drop the package right on the other side of the door, and is filmed doing so). Amazon demonstrates their willingness to at least appear privacy-respecting on other fronts too: The microphone-off button on the Amazon Echo devices cuts power to the microphone array and lights up a “mute” LED: it’s impossible to turn on the LED under software control. When the LED is on, the microphone is off.

The primary concern still is an issue of trust: Do I trust the device to only record and transmit audio when I intend for it to do so? In theory the device manufacturer could have the device surreptitiously record everything. There’s no easy way to audit either the device or its connections to the outside world. Some progress has been made to extract and analyse device firmware, but ultimately this cannot rule out a silent firmware update with listening capabilities at a later date.

An Auditing System to Confirm User Intent

This essay proposes a system in which users can gain confidence that they are not surreptitiously monitored, without requiring a device manufacturer to give up any of their proprietary secrets. It assumes cooperation on the part of the manufacturer and a certain technical expertise on the part of at least some of the users.

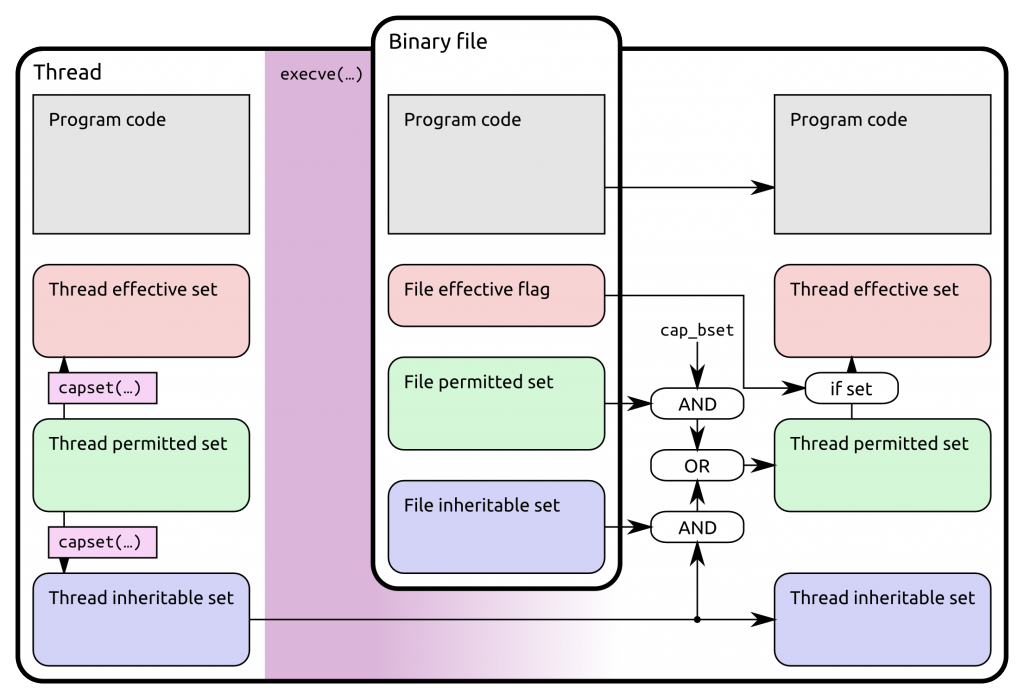

Step 1: The manufacturer augments their back-end systems to log device activity and TLS session keys, and keeps these logs for a certain number of days.

Step 2: The end user passively records all incoming and outgoing traffic from the device. Obviously only a small percentage of end users will be able to do that and only a fraction of those will actually record the traffic. But since the manufacturer cannot be sure which devices are being monitored, they risk detection if they tamper with any of them.

Step 3: The user requests a list of session keys from the manufacturer and uses it to decrypt the captured connections.

Step 4: The manufacturer provides a machine-readable list of activities of the device, both user-initiated (such as queries to a voice assistant) and automated (such as firmware updates, or normal device telemetry).

Step 5: Analysis software matches the list of device activities to the recorded connections and flags any suspicious activity. The software should be open source, initially provided by the manufacturer, and be extensible by the community at large.

Step 6: The user can cross-check the now-vetted list of device activities to confirm whether it matches their intent.
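The matching in step 5 could be sketched roughly as follows. This is only an illustration under stated assumptions: the `Activity` and `Connection` record layouts, the `flag_unexplained` function and the `slack` tolerance are all hypothetical, and the sketch assumes that the capture has already been decrypted (step 3) and that each connection is expected to fall entirely inside the time window of one logged activity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    """One entry of the manufacturer's activity list (step 4). Hypothetical schema."""
    start: float          # Unix timestamp in seconds
    end: float
    kind: str             # e.g. "voice_query", "firmware_update", "telemetry"
    user_initiated: bool


@dataclass(frozen=True)
class Connection:
    """One decrypted connection from the user's capture (steps 2 and 3). Hypothetical schema."""
    start: float
    end: float
    bytes_up: int
    bytes_down: int


def flag_unexplained(connections, activities, slack=2.0):
    """Return the connections that no logged activity accounts for.

    A connection counts as explained if it lies entirely inside the time
    window of at least one activity, widened by `slack` seconds on both
    sides to tolerate clock skew (an assumed tolerance).
    """
    flagged = []
    for conn in connections:
        explained = any(
            act.start - slack <= conn.start and conn.end <= act.end + slack
            for act in activities
        )
        if not explained:
            flagged.append(conn)
    return flagged


if __name__ == "__main__":
    activities = [
        Activity(100.0, 110.0, "voice_query", True),
        Activity(500.0, 560.0, "firmware_update", False),
    ]
    connections = [
        Connection(101.0, 109.0, 40_000, 2_000),       # inside the voice query
        Connection(300.0, 330.0, 900_000, 500),        # matches nothing in the log
        Connection(499.0, 561.0, 300, 150_000_000),    # firmware update, within slack
    ]
    for conn in flag_unexplained(connections, activities):
        print("suspicious:", conn)
```

A real tool would also have to compare payload sizes and contents against the logged activity kind; matching on time alone is the weakest useful check, chosen here only to keep the sketch short.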

The most impractical step is number 2: Only a few users would bother to configure their networks in a way that allows the device traffic to be monitored. However, I believe that even the possibility of monitoring should deter malicious behaviour. This step is also the one most easily supported by third-party tools: An OpenWRT extension, for example, would greatly simplify the recording for users of OpenWRT, and other CPE manufacturers could follow suit [1].

The IoT device manufacturer may want to keep some data (such as firmware update files or received audio streams) proprietary and secret. They must do so in a manner that allows the analysis tool to confirm that only downstream data is withheld: either by using a separate, at-rest encryption layer inside the TLS connection, or by using a separate TLS connection to a special endpoint which carries only the absolute minimum of information (one small HTTP request) in the upstream direction. The analysis tool is then able to ignore the contents of this proprietary data while still being able to flag anomalies in the metadata (“Three 150MB firmware updates in a day? Really?”).
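A metadata-only check on such withheld transfers might look like the sketch below. Everything in it is an assumption made for illustration: the `OpaqueTransfer` record, the function name, and in particular the two thresholds (a 4096-byte ceiling for "one small HTTP request" and at most one firmware update per day), which a real tool would need to agree on with the manufacturer.

```python
from collections import Counter
from dataclasses import dataclass

# Assumed thresholds, not taken from any manufacturer's specification.
MAX_UPSTREAM_BYTES = 4096          # ceiling for "one small HTTP request"
MAX_FIRMWARE_UPDATES_PER_DAY = 1


@dataclass(frozen=True)
class OpaqueTransfer:
    """Metadata of one transfer whose downstream payload stays secret. Hypothetical schema."""
    day: str          # e.g. "2024-01-01"
    kind: str         # e.g. "firmware_update", "audio_stream"
    bytes_up: int
    bytes_down: int


def flag_metadata_anomalies(transfers,
                            max_up=MAX_UPSTREAM_BYTES,
                            max_fw_per_day=MAX_FIRMWARE_UPDATES_PER_DAY):
    """Return human-readable findings without looking at any payload."""
    findings = []

    # The proprietary channel must carry almost nothing upstream.
    for t in transfers:
        if t.bytes_up > max_up:
            findings.append(
                f"{t.day}: {t.kind} sent {t.bytes_up} bytes upstream (limit {max_up})"
            )

    # Too many firmware downloads on one day is suspicious in itself.
    per_day = Counter(t.day for t in transfers if t.kind == "firmware_update")
    for day, count in sorted(per_day.items()):
        if count > max_fw_per_day:
            findings.append(
                f"{day}: {count} firmware updates (limit {max_fw_per_day})"
            )

    return findings


if __name__ == "__main__":
    transfers = [
        OpaqueTransfer("2024-01-01", "firmware_update", 600, 150_000_000),
        OpaqueTransfer("2024-01-01", "firmware_update", 600, 150_000_000),
        OpaqueTransfer("2024-01-01", "firmware_update", 600, 150_000_000),
        OpaqueTransfer("2024-01-02", "audio_stream", 50_000, 3_000_000),
    ]
    for finding in flag_metadata_anomalies(transfers):
        print(finding)
```

The upstream limit is the important part of the design: it is what lets the tool conclude that the withheld channel cannot be carrying recorded audio back to the manufacturer.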

Rationale: A scheme that forcibly opens up firmware files or DRM’ed audio streams would be a non-starter for industry adoption. Decrypting this downstream content isn’t necessary for the goal of confirming user intent. Conversely, all information carried on the upstream channel by definition belongs to the user, since they generated it. (If they didn’t generate it, it wouldn’t need to be transferred.)

Potential for abuse: When proposing to store new kinds of data (step 1), it’s important to analyze the potential for abuse of this data, be it by law-enforcement agencies (LEAs) or by vengeful ex-partners. I believe no new threat is introduced here: The current backends of manufacturers with voice assistants already store voice recordings and generally give the option to look at the device history or download recordings (both to LEAs and to anyone with account access). The data recorded in step 1 should give no additional insight into user behaviour beyond what is already recorded under the status quo, except that it allows the user to confirm the completeness of the log.

[1] This opens up the question of whether one trusts their CPE manufacturer to build correct logging and to not collude with the IoT device manufacturer.